I recently finished reading Ellen Cushman’s The Cherokee Syllabary, an excellent book on the history and spread of the writing system developed by Sequoyah for the Cherokee tribe. Cushman does a thorough job explaining how the syllabary works as a syllabary, rather than describing it in alphabetic terms. She argues that to explain a syllabary in terms of one-to-one sound-grapheme correspondence (which is often the tack in linguistic work) is already to analyze it in alphabetic terms.

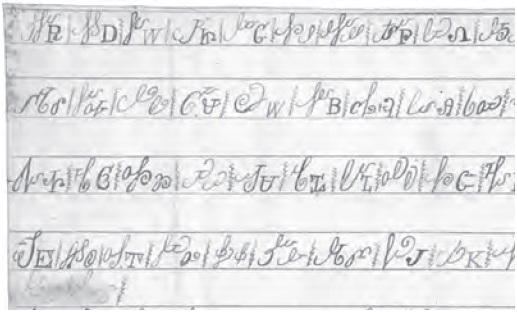

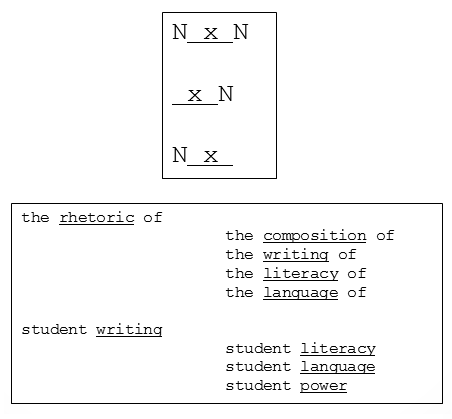

One of Cushman’s central projects in the book is to demonstrate how the Cherokee syllabary—both its structure and graphic representation—grew from Cherokee culture. It was not, she argues, a simple borrowing and re-application of the Roman alphabetic script. Most scholars would disagree with her, including Henry Rogers in Writing Systems: A Linguistic Approach and Steven Roger Fischer in A History of Writing. Fischer claims that “using an English spelling book, [Sequoyah] arbitrarily appointed letters of the alphabet” to correspond with units of sound in Cherokee (287). Cushman counters this claim by pointing out that linguists only make it after looking at the printed form of Cherokee, which, by necessity, remediated Sequoyah’s original syllabary into a more Latinate form. Cushman provides us with pictures of the original syllabary, as well as a new Unicode font that she believes more adequately represents the original style:

Much of Cushman’s book is devoted to showing the connection between Cherokee culture and the syllabary, a connection which obviates the need to assume some sort of alphabetic borrowing.

I’m not at all convinced by this main argument (still lots of Latinate forms up there), but I was quite interested, after reading the book, in another point Cushman makes about what it means to be Native American, both historically and contemporarily. She posits “four pillars of Native peoplehood: language, history, religion, and place” (6). I would argue that language is the most powerful of the four, but Cushman merely claims that the loss of the Cherokee language would “spell the ruin of an integral part of Cherokee identity.”

No doubt it would. And this got me thinking about native language health in general. As regards Cherokee specifically, Cushman writes that “while the Cherokees are one of the largest tribes in the United States, the Cherokee Nation estimates that only a few thousand speak, read, and write the Cherokee language” (6). I checked this statistic and found it to be correct but misleading. Perhaps only a few thousand Cherokees “speak, read, and write” Cherokee, but 16,000 speak the language.

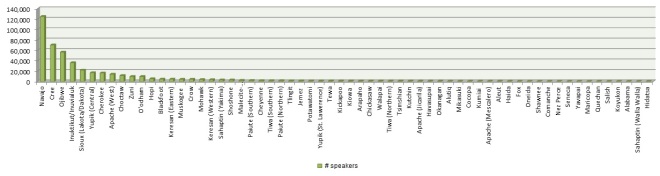

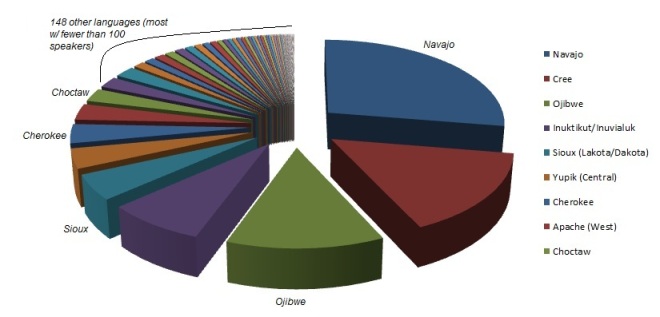

So what about other native languages? Using Ethnologue and the World Atlas of Language Structures, I ranked all native American languages (and a few Canadian languages) by their ‘linguistic health’, measured purely as number of speakers. Here’s a bar chart of native languages with more than 100 speakers. Already, you can notice the seriously skewed curve that I’ll discuss in a moment . . .

Now, no native language in America (or Canada) is ‘healthy’ compared to English, Spanish, Mandarin, Hindi, or the world’s other dominant languages. Nearly all native American languages are endangered, severely endangered, or extinct. Only one—Navajo—escapes the ‘endangered’ list, but even then, Navajo is lately considered ‘vulnerable’ because the youngest generation is switching to English.

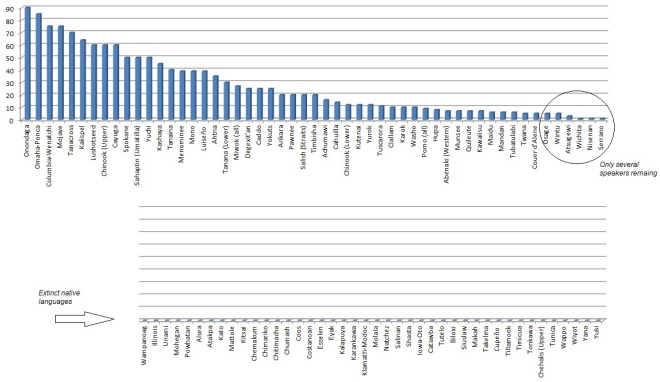

Within this continuum of endangered native languages, however, there exists a highly skewed continuum of linguistic health. There are approx. 115 living languages in America, but only 35 possess more than 1,000 speakers. Only 9 possess more than 10,000 speakers. And only 3 possess more than 50,000 speakers. In other words, the great bulk of living native American languages are in bad shape, and will likely go extinct within the next generation, joining the 41 native languages that already have gone extinct. Here’s the ranking of native languages with fewer than 100 speakers:

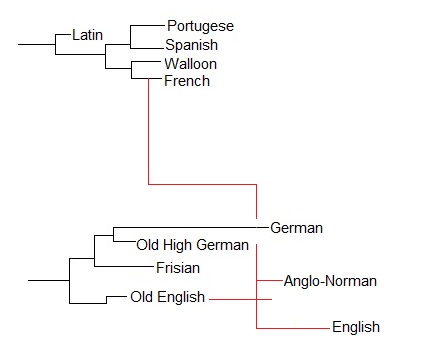
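The tiers quoted above (more than 1,000, more than 10,000, more than 50,000 speakers) are simple threshold counts over a table of speaker numbers. Here is a minimal Python sketch of that tally. The function name `count_above` and the sample table are assumptions made for illustration; the placeholder counts are not the data behind the charts.

```python
from typing import Dict, Iterable


def count_above(speakers: Dict[str, int], thresholds: Iterable[int]) -> Dict[int, int]:
    """For each threshold, count the languages with strictly more speakers than it."""
    return {t: sum(1 for n in speakers.values() if n > t) for t in thresholds}


# Placeholder speaker counts, for illustration only (NOT the post's data set).
sample = {
    "Language A": 120_000,
    "Language B": 16_000,
    "Language C": 2_500,
    "Language D": 40,
    "Language E": 3,
}

tiers = count_above(sample, [1_000, 10_000, 50_000])
for threshold, count in tiers.items():
    print(f"more than {threshold:,} speakers: {count} language(s)")
```

Run against a full table of speaker counts, the same function would reproduce the kind of tier summary given above.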

And yet what interests me about this data is not the obvious point about language loss in our post-colonial present. Language loss is the inevitable outcome in the wake of conquest; Old English itself was lost when the Norman French invaded Britain. Rather, what interests me is that, extinction and severe endangerment being the rule, several languages have managed to become glaring exceptions to the rule. Why?

According to my list, there are approximately 454,515 native language speakers in America and parts of Canada, since I’ve included Cree and Ojibwe, Canada’s healthiest native languages, in my list (see the end of this post for more methodological details). At the start of the colonial era, there were somewhere between 2 and 7 million natives living in what is now the U.S. and Canada, with most of that population inhabiting the U.S. Splitting the difference, we can say there were 4.5 million native language speakers pre-conquest but only 454,515 today. That’s a nearly 90% reduction in native language speakers over the course of 500 years.

(Note: this is not the same as a reduction in population. There are currently 2.9 million native Americans in the U.S., which, depending on your source, is anywhere from a net gain in population between the 15th and 21st centuries to a loss of around 50-60% of the total native population. The comparatively drastic loss in number of native language speakers, however, results from the fact that most native Americans have, both recently and historically, switched to English.)
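The speaker-loss figure is easy to check, and it is worth seeing how much it moves across the 2 to 7 million range. The sketch below is a rough calculation, not a demographic model. It assumes, as the estimate above does, that every pre-conquest inhabitant spoke a native language. The variable and function names are my own.

```python
# Figures quoted in the post.
PRE_CONQUEST_LOW = 2_000_000
PRE_CONQUEST_HIGH = 7_000_000
SPEAKERS_TODAY = 454_515


def reduction(before: float, after: float) -> float:
    """Fractional drop from `before` to `after`."""
    return (before - after) / before


# "Splitting the difference" between the low and high estimates.
midpoint = (PRE_CONQUEST_LOW + PRE_CONQUEST_HIGH) / 2

for label, baseline in [
    ("low estimate", PRE_CONQUEST_LOW),
    ("midpoint", midpoint),
    ("high estimate", PRE_CONQUEST_HIGH),
]:
    print(f"{label} ({baseline:,.0f}): {reduction(baseline, SPEAKERS_TODAY):.1%} fewer speakers")
```

At the 4.5 million midpoint the drop comes to just under 90%. Across the whole range it runs from roughly 77% to roughly 94%, so the conclusion holds whichever population estimate you prefer.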

Speaking of languages, then, not population, it seems as though total annihilation is the most probable outcome for a language after conquest. It seems almost inevitable that a conquered population’s language will eventually become the language of the conqueror. (This is why only 100,000 people speak Irish in Ireland, and why no one speaks an un-Romanized version of English.)

Thus, it’s not surprising that most native languages possess fewer than 1,000 speakers, or that more than half only have between 1 and 100 speakers—i.e., it’s not surprising that more than half of native American languages are practically extinct. If we ignore the nine ‘healthiest’ native languages (the outliers with more than 10,000 speakers), then the total reduction in native language speakers between pre-colonial times and today rapidly approaches 100%.

Which returns us to the interesting thing about this data: the existence of these (comparatively) healthy native American languages. The nine healthiest languages have a total of 368,259 speakers, which translates to 81% of all native language speakers across all tribal languages; and yet these nine languages comprise only 7% of all native languages. In other words, 81% of native language speakers in America and parts of Canada speak only 7% of the existing native languages (less than 4% of all native languages, if we factor extinct languages and all Canadian languages into the equation).
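As a rough check on these proportions, here is a minimal Python sketch using only figures quoted in this post. Two assumptions to flag: the living-language count is the rounded "approx. 115" given earlier, so the language share it produces (a little under 8%) differs slightly from the 7% cited above, and the 4.5 million baseline is the midpoint estimate. The Canada-inclusive figure is not computed because the post does not give that language count.

```python
# Figures quoted in the post.
TOP_NINE_SPEAKERS = 368_259
ALL_SPEAKERS = 454_515
TOP_LANGUAGES = 9
LIVING_LANGUAGES = 115          # approximate count from the post (assumption)
EXTINCT_LANGUAGES = 41
PRE_CONQUEST_MIDPOINT = 4_500_000

# Share of all native language speakers who speak one of the nine healthiest languages.
speaker_share = TOP_NINE_SPEAKERS / ALL_SPEAKERS

# Share of languages those nine represent, among living languages and among living plus extinct.
language_share = TOP_LANGUAGES / LIVING_LANGUAGES
language_share_with_extinct = TOP_LANGUAGES / (LIVING_LANGUAGES + EXTINCT_LANGUAGES)

# Speakers of every other living language combined, and the loss relative to the midpoint baseline.
remaining_speakers = ALL_SPEAKERS - TOP_NINE_SPEAKERS
reduction_excluding_top = 1 - remaining_speakers / PRE_CONQUEST_MIDPOINT

print(f"speakers in the top nine languages: {speaker_share:.1%}")
print(f"top nine as a share of living languages: {language_share:.1%}")
print(f"top nine as a share of living + extinct: {language_share_with_extinct:.1%}")
print(f"speakers outside the top nine: {remaining_speakers:,}")
print(f"reduction if the top nine are ignored: {reduction_excluding_top:.1%}")
```

The last line is the calculation behind the earlier remark that, without the nine outliers, the reduction approaches 100%: about 86,000 speakers remain across all the other living languages.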

I imagine that if we look at any area on the globe where conquered indigenous languages jostle beside more powerful indigenous or colonial languages, we’ll find similar data showing that, even amongst the less powerful languages, there remains a very skewed hierarchy of linguistic health. One can’t help wondering what’s at work here . . .

I enjoy compiling large sets of data like this because certain questions just don’t come into sharp focus until we compile the data. I think most rhet/comp scholars, like Cushman, have a general understanding that certain native American languages are in better shape than others; however, until we take the time to work with the actual data set (all living and extinct native American languages), we won’t discover this skewed pattern within it, and we won’t be able to formulate what, to my mind, are highly interesting and relevant questions: why and how have certain languages managed to survive and (comparatively) thrive while most other native languages have gone extinct or dwindled to only a few hundred speakers? What did these languages and tribal groups have going for them that the others didn’t? Was it a purely linguistic advantage, a purely geopolitical advantage, or a combination of both?

In part, we can read Cushman’s book as an answer to these unformulated questions. While Cushman spends a lot of time (rightly) describing language attrition among contemporary Cherokees, she perhaps doesn’t realize that Cherokee is doing a hell of a lot better than most other native languages. Although her book presents something of a contrast between the language’s current weakened state and the syllabary’s historic role in uniting and strengthening the Cherokee against further Western encroachment, we can see, in light of this data, how the contrast is perhaps instead a partial explanation for the fact that Cherokee isn’t as unhealthy as the vast majority of native American languages. In other words, the existence of the Cherokee syllabary may very well be one of the reasons why Cherokee exists on the healthier side of living native languages, why Cherokee isn’t entirely extinct.

Stylizing Sequoyah’s thought process, Cushman writes, “If whites could have a writing system that so benefited them, filling them with self-respect and earning the respect of others, then Cherokees could have a writing system with all this power as well” (35). After compiling statistics on native language health, I can see that Cushman, in focusing on current language attrition among the Cherokee, misses a deeper exploration of a compelling possibility: that the syllabary’s power not only bolstered the Cherokee people but also perhaps played a part in saving the Cherokee language itself from total extinction. The syllabary’s strengthening role was not merely a historic phenomenon; without it, perhaps there wouldn’t be a Cherokee language today at all.

This is a good example of why I think digital tools and databases have a lot to offer the humanities: without them, patterns go unnoticed and questions go unasked.

Methodological notes: I couldn’t rank linguistic health among native languages without first deciding what “counted” as a native language and what was simply a dialect of a language. This language/dialect issue is sometimes difficult to navigate, and Ethnologue typically gives each dialect its own language code. But such granularity is misleading; Madrid Spanish and Buenos Aires Spanish are different in many respects, but speakers in both places can understand one another because they are still, despite the differences, speaking Spanish.

Mutual intelligibility between speaker populations is the general rule for differentiating a dialect from a separate language, and I’ve done my best to follow that rule. For example, I’ve counted Ojibwe as a single language, even though Ojibwe is in fact a continuum of dialects; on the other hand, I’ve divided the Miwok continuum into different languages (Sierra Miwok, Plains Miwok, et cetera). The Miwok languages are closely related, but their speakers have difficulty understanding one another in a way that speakers of Ojibwe dialects do not. So, Ojibwe is a single language, while the Miwok ‘dialects’ should really be considered separate languages.

However, none of this made huge differences in the ranking. Some might quibble with my grouping of all Ojibwe or Cree dialects into a single language, but even had I taken out the dialects that aren’t perfectly intelligible with the others, each of these languages still would have retained tens of thousands of speakers. Conversely, even had I counted all Miwok speakers as a single linguistic group, Miwok would still have fewer than 50 speakers.

Finally, when compiling statistics on numbers of speakers for each language, I used field linguists’ counts when they were available, rather than census counts, which tend to err on the side of liberality. (E.g., according to the U.S. census, there are over 150,000 Navajo speakers, but most linguists consider this an unlikely number.)